Tech Sharing

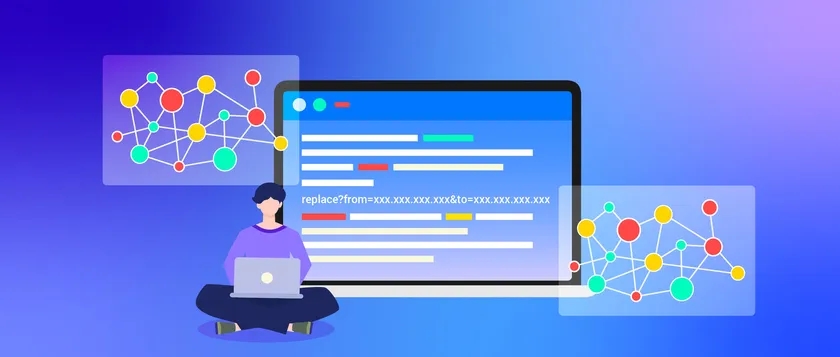

Practice | A Working IP Replacement Procedure for NebulaGraph 2.x and 3.x

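The NebulaGraph forum has a post that explains how to keep an existing cluster running after its IPs change. That post is fairly theoretical, so in this article a front-line engineer shares his hands-on procedure and the pitfalls he ran into. The goal is to take you one step closer to changing IPs in a real production environment.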

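⚠️ Note: this article is based on real operational experience, but modifying a production environment is a high-risk operation. If you are not familiar with the services involved, we do not recommend following these steps. Also, back up your data, and check the backup again, before you start.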

Background

When the IPs of your cluster nodes have to change, you can replace them with the steps below. You do not need to re-import any data.

This article uses a three-node cluster as the example. The machine IPs 192.168.16.1, 192.168.16.2 and 192.168.16.3 are changed to the new addresses 192.168.16.17, 192.168.16.18 and 192.168.16.19.

The article also shows how to switch the service addresses from the IP:Port form to the Hostname:Port form. The hostnames used here are new-node1, new-node2 and new-node3.

Step by step

Changing the machine IPs takes the following steps:

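- Change the IP information:
  - Update the old IPs in every node's configuration files to the new addresses
  - Start the meta and graph services
- Change the cluster meta data:
  - Find the meta leader of the new cluster
  - Modify the storage information in meta
- Adjust the cluster's storage services:
  - Delete cluster.id on every storage node
  - Start all storage services
  - Add the new hosts and drop the old hosts
- Verify the result:
  - Check that the services are healthy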

Change the IP information

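Use sed to edit the files ending in **.conf** in the etc subdirectory of the installation directory. In the commands below, 192.168.16.1, 192.168.16.2 and 192.168.16.3 are the old IPs, and new-node1, new-node2 and new-node3 are the new hostnames. If you are replacing IPs with IPs, run these three commands in the etc directory: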

```shell
# \b (GNU sed) stops 192.168.16.1 from also matching inside addresses such as 192.168.16.10
$ sed -i 's/192\.168\.16\.1\b/192.168.16.17/g' *.conf
$ sed -i 's/192\.168\.16\.2\b/192.168.16.18/g' *.conf
$ sed -i 's/192\.168\.16\.3\b/192.168.16.19/g' *.conf
```

If you are replacing IPs with hostnames, run these three commands in the etc directory:

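```shell
# \b (GNU sed) stops 192.168.16.1 from also matching inside addresses such as 192.168.16.10
$ sed -i 's/192\.168\.16\.1\b/new-node1/g' *.conf
$ sed -i 's/192\.168\.16\.2\b/new-node2/g' *.conf
$ sed -i 's/192\.168\.16\.3\b/new-node3/g' *.conf
```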
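If you would rather not type the replacements one by one, they can be wrapped in a small function. This is a minimal sketch and not part of the original procedure. It assumes GNU sed (for `-i` and `\b`) and a POSIX shell, and the helper name `replace_ips_in_confs` is hypothetical. The function copies each `.conf` file to `.conf.bak` once, then applies all `old=new` pairs in a single sed pass. Word boundaries keep `192.168.16.1` from matching inside `192.168.16.10` or an already replaced `192.168.16.17`.

```shell
# Minimal sketch (hypothetical helper, not from the original article).
# Assumes GNU sed: -i edits in place and \b is a word boundary.
replace_ips_in_confs() {
  local dir="$1" script="" pair old new old_re f
  shift
  for pair in "$@"; do
    old="${pair%%=*}"                 # text before the first "="
    new="${pair#*=}"                  # text after the first "="
    # Escape the dots so the old IP is matched literally.
    old_re=$(printf '%s' "$old" | sed 's/\./\\./g')
    # \b on both sides: 192.168.16.1 does not match inside 192.168.16.17.
    script="${script:+$script;}s/\\b${old_re}\\b/${new}/g"
  done
  for f in "$dir"/*.conf; do
    [ -e "$f" ] || continue           # the glob matched nothing
    cp -p "$f" "$f.bak"               # one backup per file, taken before editing
    sed -i "$script" "$f"
  done
}

# Usage, run from the installation directory's etc subdirectory:
# replace_ips_in_confs . 192.168.16.1=new-node1 192.168.16.2=new-node2 192.168.16.3=new-node3
```

Running the function again overwrites the `.bak` files, so keep a separate copy of the original configuration if you might need to run it more than once.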

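A word on why hostnames are worth it: if services are addressed by hostname, the next time the IPs change you only need to update the hosts file on each machine. No other work is needed!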

If you are switching from IPs to hostnames, remember to edit the hosts file on every machine:

```
$ vi /etc/hosts

192.168.16.17 new-node1
192.168.16.18 new-node2
192.168.16.19 new-node3
```
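When you edit hosts files on several machines, it is easy to add a line twice or to forget one. One way to avoid this is to make the edit idempotent. The following is a sketch under these assumptions. The helper `ensure_hosts_entry` is hypothetical. It takes the hosts file path as an argument, which is normally /etc/hosts and requires root to edit. It appends a mapping only when the hostname is not already present on a non-comment line. It does not rewrite an existing mapping, so check any existing entries by hand.

```shell
# Hypothetical helper: append "<ip> <name>" to a hosts file unless <name> is
# already mapped on some non-comment line. Existing mappings are left untouched.
ensure_hosts_entry() {
  local hosts_file="$1" ip="$2" name="$3"
  if awk -v n="$name" '
       $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
       END { exit !found }' "$hosts_file"; then
    echo "skip: $name is already in $hosts_file" >&2
  else
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
  fi
}

# Usage on every machine (editing /etc/hosts needs root):
# ensure_hosts_entry /etc/hosts 192.168.16.17 new-node1
# ensure_hosts_entry /etc/hosts 192.168.16.18 new-node2
# ensure_hosts_entry /etc/hosts 192.168.16.19 new-node3
# getent hosts new-node1   # expected to resolve to 192.168.16.17
```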

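Once everything is in place, start the meta and graph services. This applies whether you are replacing IPs with IPs or IPs with hostnames. The running services are needed for the meta information replacement that follows: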

```shell
$ /usr/local/nebula/scripts/nebula.service start metad
$ /usr/local/nebula/scripts/nebula.service start graphd
```

Change the cluster meta information

The meta information has to be replaced through the meta leader, so first find the meta leader of the new cluster.

If the new addresses are IPs, you will see output like this:

```
$ ./nebula-console3.0 -address 192.168.16.17 -port 9669 -u root -p nebula

Welcome to Nebula Graph!

(root@nebula) [(none)]> show meta leader
+----------------------+---------------------------+
| Meta Leader          | secs from last heart beat |
+----------------------+---------------------------+
| "192.168.16.18:9559" | 4                         |
+----------------------+---------------------------+
Got 1 rows (time spent 282/871 us)
```

If the new addresses are hostnames, you will see output like this:

```
$ ./nebula-console3.0 -address "new-node1" -port 9669 -u root -p nebula

Welcome to Nebula Graph!

(root@nebula) [(none)]> show meta leader
+------------------+---------------------------+
| Meta Leader      | secs from last heart beat |
+------------------+---------------------------+
| "new-node2:9559" | 4                         |
+------------------+---------------------------+
Got 1 rows (time spent 282/871 us)
```
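If you script the procedure, you can extract the leader address from the `SHOW META LEADER` table instead of copying it by hand. This sketch assumes the table layout shown above, where the leader is the first double-quoted value in a data row. The name `parse_meta_leader` is hypothetical. Feeding the function requires a non-interactive console run. The usage lines below assume nebula-console's `-e` option, which executes one statement and exits; check that your build supports it.

```shell
# Hypothetical helper: print the meta leader's host:port from
# SHOW META LEADER output read on stdin (table layout as shown above).
parse_meta_leader() {
  # A data row looks like: | "192.168.16.18:9559" | 4 |
  sed -n 's/^|[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
}

# Usage, assuming nebula-console's -e option:
# leader=$(./nebula-console3.0 -address 192.168.16.17 -port 9669 -u root -p nebula \
#            -e 'SHOW META LEADER' | parse_meta_leader)
# meta_host=${leader%:*}   # strip ":9559", leaving e.g. 192.168.16.18
```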

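Next comes the most important step: modify the storage information in meta.

For IP-to-IP replacement, follow these steps:

Here 192.168.16.18 is the meta leader found in the previous step. The from and to parameters are the old IP and the new IP respectively.

A response of "Replace Host in partition successfully" means the replacement succeeded.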

```shell
curl -G "http://192.168.16.18:19559/replace?from=192.168.16.1:9779&to=192.168.16.17:9779"
curl -G "http://192.168.16.18:19559/replace?from=192.168.16.2:9779&to=192.168.16.18:9779"
curl -G "http://192.168.16.18:19559/replace?from=192.168.16.3:9779&to=192.168.16.19:9779"
```

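For IP-to-hostname replacement, follow these steps:

Here new-node2 is the meta leader found in the previous step. The from and to parameters are the old IP and the new address respectively.

A response of "Replace Host in partition successfully" means the replacement succeeded.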

```shell
curl -G "http://new-node2:19559/replace?from=192.168.16.1:9779&to=new-node1:9779"
curl -G "http://new-node2:19559/replace?from=192.168.16.2:9779&to=new-node2:9779"
curl -G "http://new-node2:19559/replace?from=192.168.16.3:9779&to=new-node3:9779"
```

⚠️ Note: on NebulaGraph 3.x, the from and to addresses must include the port. On NebulaGraph 2.x, leave the port out.
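A wrong port here can lead to storaged deleting data, as the next section describes. It can therefore help to wrap the curl calls so that each one checks the port and the response before moving on. This is a minimal sketch that assumes NebulaGraph 3.x and the ports used in this article: 19559 for the metad HTTP service and 9779 for storaged. The function name `replace_storage_host` is hypothetical. The check only catches a mistyped port, not a wrong IP. It also does not apply to 2.x, where the port is omitted.

```shell
# Minimal sketch for NebulaGraph 3.x (hypothetical helper).
# Defaults follow the ports used in this article; override them if yours differ.
META_HTTP_PORT="${META_HTTP_PORT:-19559}"   # metad HTTP port
STORAGE_PORT="${STORAGE_PORT:-9779}"        # storaged port expected in from/to

replace_storage_host() {
  local leader="$1" from="$2" to="$3" resp
  # Refuse to call meta unless both addresses end in the expected storage port.
  case "$from" in *":$STORAGE_PORT") ;; *) echo "unexpected port in from: $from" >&2; return 1 ;; esac
  case "$to" in *":$STORAGE_PORT") ;; *) echo "unexpected port in to: $to" >&2; return 1 ;; esac
  resp=$(curl -sS -G "http://${leader}:${META_HTTP_PORT}/replace?from=${from}&to=${to}") || return 1
  case "$resp" in
    *"Replace Host in partition successfully"*) echo "ok: $from -> $to" ;;
    *) echo "replace failed for $from -> $to: $resp" >&2; return 1 ;;
  esac
}

# Usage (IP to hostname, meta leader new-node2); stops at the first failure:
# replace_storage_host new-node2 192.168.16.1:9779 new-node1:9779 &&
# replace_storage_host new-node2 192.168.16.2:9779 new-node2:9779 &&
# replace_storage_host new-node2 192.168.16.3:9779 new-node3:9779
```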

Lessons learned the hard way

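Here is a pitfall I hit in an earlier attempt. On NebulaGraph 3.x, the ports in the from and to addresses above must be exactly right. If a port is wrong, replacing the host in the partition information stored in meta fails. storaged then concludes that the old space no longer exists and automatically deletes all of its data!!!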

The log looked like this:

```
I20240402 19:15:08.243983 98979 NebulaStore.cpp:96] Start the raft service...
I20240402 19:15:08.249043 98979 NebulaSnapshotManager.cpp:25] Send snapshot is rate limited to 10485760 for each part by default
I20240402 19:15:08.252173 98979 RaftexService.cpp:47] Start raft service on 9780
I20240402 19:15:08.252661 98979 NebulaStore.cpp:108] Register handler...
I20240402 19:15:08.252676 98979 NebulaStore.cpp:136] Scan the local path, and init the spaces_
I20240402 19:15:08.252732 98979 NebulaStore.cpp:144] Scan data path "/data1/nebula/nebula/0"
I20240402 19:15:08.252741 98979 NebulaStore.cpp:144] Scan data path "/data1/nebula/nebula/1"
I20240402 19:15:08.252750 98979 NebulaStore.cpp:163] Remove outdated space 1 in path /data1/nebula/nebula/1
I20240402 19:15:08.252756 98979 NebulaStore.cpp:888] Try to remove directory: /data1/nebula/nebula/1
I20240402 19:15:51.447275 98979 NebulaStore.cpp:890] Directory removed: /data1/nebula/nebula/1
I20240402 19:15:51.447345 98979 NebulaStore.cpp:144] Scan data path "/data2/nebula/nebula/1"
I20240402 19:15:51.447361 98979 NebulaStore.cpp:163] Remove outdated space 1 in path /data2/nebula/nebula/1
I20240402 19:15:51.447367 98979 NebulaStore.cpp:888] Try to remove directory: /data2/nebula/nebula/1
I20240402 19:16:35.420459 98979 NebulaStore.cpp:890] Directory removed: /data2/nebula/nebula/1
I20240402 19:16:35.420529 98979 NebulaStore.cpp:144] Scan data path "/data3/nebula/nebula/1"
I20240402 19:16:35.420545 98979 NebulaStore.cpp:163] Remove outdated space 1 in path /data3/nebula/nebula/1
I20240402 19:16:35.420552 98979 NebulaStore.cpp:888] Try to remove directory: /data3/nebula/nebula/1
I20240402 19:17:21.259413 98979 NebulaStore.cpp:890] Directory removed: /data3/nebula/nebula/1
I20240402 19:17:21.259485 98979 NebulaStore.cpp:144] Scan data path "/data4/nebula/nebula/1"
I20240402 19:17:21.259500 98979 NebulaStore.cpp:163] Remove outdated space 1 in path /data4/nebula/nebula/1
I20240402 19:17:21.259506 98979 NebulaStore.cpp:888] Try to remove directory: /data4/nebula/nebula/1
I20240402 19:18:08.185812 98979 NebulaStore.cpp:890] Directory removed: /data4/nebula/nebula/1
I20240402 19:18:08.185892 98979 NebulaStore.cpp:144] Scan data path "/data5/nebula/nebula/1"
I20240402 19:18:08.185909 98979 NebulaStore.cpp:163] Remove outdated space 1 in path /data5/nebula/nebula/1
I20240402 19:18:08.185015 98979 NebulaStore.cpp:888] Try to remove directory: /data5/nebula/nebula/1
I20240402 19:18:55.286984 98979 NebulaStore.cpp:890] Directory removed: /data5/nebula/nebula/1
I20240402 19:18:55.287062 98979 NebulaStore.cpp:144] Scan data path "/data6/nebula/nebula/1"
I20240402 19:18:55.287079 98979 NebulaStore.cpp:163] Remove outdated space 1 in path /data6/nebula/nebula/1
I20240402 19:18:55.287086 98979 NebulaStore.cpp:888] Try to remove directory: /data6/nebula/nebula/1
I20240402 19:19:49.058575 98979 NebulaStore.cpp:890] Directory removed: /data6/nebula/nebula/1
I20240402 19:19:49.058643 98979 NebulaStore.cpp:311] Init data from partManager for "new-node1":9779
I20240402 19:19:49.058679 98979 StorageServer.cpp:278] Init LogMonitor
I20240402 19:19:49.058822 98979 StorageServer.cpp:130] Starting Storage HTTP Service
I20240402 19:19:49.059319 98979 StorageServer.cpp:134] Http Thread Pool started
I20240402 19:19:49.062161 99318 WebService.cpp:130] Web service started on HTTP[19779]
I20240402 19:19:49.082093 98979 RocksEngineConfig.cpp:386] Emplace rocksdb option max_background_jobs=64
I20240402 19:19:49.082144 98979 RocksEngineConfig.cpp:386] Emplace rocksdb option max_subcompactions=64
I20240402 19:19:49.082334 98979 RocksEngineConfig.cpp:386] Emplace rocksdb option max_bytes_for_level_base=268435456
I20240402 19:19:49.082346 98979 RocksEngineConfig.cpp:386] Emplace rocksdb option max_write_buffer_number=4
I20240402 19:19:49.082352 98979 RocksEngineConfig.cpp:386] Emplace rocksdb option write_buffer_size=67108864
I20240402 19:19:49.082358 98979 RocksEngineConfig.cpp:386] Emplace rocksdb option disable_auto_compaction=false
I20240402 19:19:49.082590 98979 RocksEngineConfig.cpp:386] Emplace rocksdb option block_size=8192
I20240402 19:19:49.120257 60324 EventListener.h:21] Rocksdb start compaction column family: default because of LevelL0FilesNum, status: Ok, compacted 4 files into 0.
I20240402 19:19:49.120302 98979 RocksEngine.cpp:117] open rocksdb on /data1/nebula/nebula/0/0/data
I20240402 19:19:49.120452 98979 AdminTaskManager.cpp:22] max concurrent subtasks: 10
```

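Look at all those remove operations above: they are deleting data!!! So make absolutely sure the ports are correct. As an extra safeguard, you can turn off the auto_remove_invalid_space parameter before you start. Then even a mistake will not wipe the data.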
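You can also script the change to that parameter. This sketch assumes that the storaged configuration file is a gflags-style file with one `--name=value` per line, as NebulaGraph's etc/*.conf files are. It also assumes that storaged reads the value at startup, so the change takes effect on the next start. `set_conf_flag` is a hypothetical helper that rewrites an existing line or appends a new one.

```shell
# Hypothetical helper: set --<flag>=<value> in a gflags-style .conf file.
# Assumes GNU sed (-i). An existing "--flag=..." line is rewritten; otherwise one is appended.
set_conf_flag() {
  local conf="$1" flag="$2" value="$3"
  if grep -q "^--${flag}=" "$conf"; then
    sed -i "s|^--${flag}=.*|--${flag}=${value}|" "$conf"
  else
    printf '%s\n' "--${flag}=${value}" >> "$conf"
  fi
}

# Usage before touching meta (path assumes the default installation directory):
# set_conf_flag /usr/local/nebula/etc/nebula-storaged.conf auto_remove_invalid_space false
```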

Adjust the cluster's storage services

Next, delete the cluster.id information. On every storage machine, remove its cluster.id file:

```shell
$ cd /usr/local/nebula
$ rm -f cluster.id
```

Once cluster.id has been deleted on every machine, start all storage services:

```shell
$ /usr/local/nebula/scripts/nebula.service start storaged
```
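With many storage machines, you can drive both passes from one host: first delete cluster.id everywhere, then start every storaged. This sketch assumes passwordless SSH to every storage host and the default installation path /usr/local/nebula. `run_on_hosts` and `reset_storage_cluster_id_and_start` are hypothetical names. Setting `DRY_RUN=1` only prints the commands, so you can review them first.

```shell
# Hypothetical helpers; assume passwordless SSH and the default install path.
NEBULA_HOME="${NEBULA_HOME:-/usr/local/nebula}"

# Run one command on every host in order; with DRY_RUN=1 only print it.
run_on_hosts() {
  local cmd="$1" host
  shift
  for host in "$@"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      printf 'ssh %s %s\n' "$host" "$cmd"
    else
      ssh "$host" "$cmd" || return 1
    fi
  done
}

# Pass 1 deletes cluster.id on all hosts; pass 2 starts storaged on all hosts.
reset_storage_cluster_id_and_start() {
  run_on_hosts "rm -f $NEBULA_HOME/cluster.id" "$@" &&
    run_on_hosts "$NEBULA_HOME/scripts/nebula.service start storaged" "$@"
}

# Review first (subshell, so DRY_RUN does not leak), then run for real:
# (DRY_RUN=1; reset_storage_cluster_id_and_start 192.168.16.17 192.168.16.18 192.168.16.19)
# reset_storage_cluster_id_and_start 192.168.16.17 192.168.16.18 192.168.16.19
```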

If you are on NebulaGraph 2.x, skip the next step, because 2.x does not need the extra ADD HOSTS.

Add the new storage hosts and drop the old storage hosts.

For IP-to-IP replacement, for example:

```
# Add
$ ./nebula-console3.0 -address 192.168.16.17 -port 9669 -u root -p nebula

Welcome to Nebula Graph!

(root@nebula) [(none)]> add hosts 192.168.16.17:9779
Execution succeeded (time spent 13720/14409 us)

(root@nebula) [(none)]> add hosts 192.168.16.18:9779
Execution succeeded (time spent 13720/14409 us)

(root@nebula) [(none)]> add hosts 192.168.16.19:9779
Execution succeeded (time spent 13720/14409 us)

# Drop
(root@nebula) [(none)]> drop hosts 192.168.16.1:9779
Execution succeeded (time spent 13720/14409 us)

(root@nebula) [(none)]> drop hosts 192.168.16.2:9779
Execution succeeded (time spent 13720/14409 us)

(root@nebula) [(none)]> drop hosts 192.168.16.3:9779
Execution succeeded (time spent 13720/14409 us)
```

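For IP-to-hostname replacement, make sure the hosts file on the machine where you run the following commands maps each hostname to its IP. For example: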

```
# Add
$ ./nebula-console3.0 -address "new-node1" -port 9669 -u root -p nebula

Welcome to Nebula Graph!

(root@nebula) [(none)]> add hosts "new-node1":9779
Execution succeeded (time spent 13720/14409 us)

(root@nebula) [(none)]> add hosts "new-node2":9779
Execution succeeded (time spent 13720/14409 us)

(root@nebula) [(none)]> add hosts "new-node3":9779
Execution succeeded (time spent 13720/14409 us)

# Drop
(root@nebula) [(none)]> drop hosts 192.168.16.1:9779
Execution succeeded (time spent 13720/14409 us)

(root@nebula) [(none)]> drop hosts 192.168.16.2:9779
Execution succeeded (time spent 13720/14409 us)

(root@nebula) [(none)]> drop hosts 192.168.16.3:9779
Execution succeeded (time spent 13720/14409 us)
```
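On larger clusters, typing one statement per host is error-prone. ADD HOSTS and DROP HOSTS accept a comma-separated list of hosts, so a small helper can build a single statement from the host names. This is a sketch. `hosts_stmt` is hypothetical. It double-quotes every host, which hostnames require; the sketch assumes quoted IPv4 addresses are also accepted. The usage lines assume nebula-console's `-e` option for non-interactive runs.

```shell
# Hypothetical helper: build one ADD/DROP HOSTS statement for several hosts.
# Every host is double-quoted (needed for hostnames, assumed fine for IPv4).
hosts_stmt() {
  local verb="$1" port="$2" list="" h
  shift 2
  for h in "$@"; do
    list="${list:+$list, }\"$h\":$port"
  done
  printf '%s HOSTS %s;\n' "$verb" "$list"
}

# Usage (IP to hostname), assuming nebula-console's -e option:
# ./nebula-console3.0 -address "new-node1" -port 9669 -u root -p nebula \
#   -e "$(hosts_stmt ADD 9779 new-node1 new-node2 new-node3)"
# ./nebula-console3.0 -address "new-node1" -port 9669 -u root -p nebula \
#   -e "$(hosts_stmt DROP 9779 192.168.16.1 192.168.16.2 192.168.16.3)"
```

Add the new hosts before dropping the old ones, as in the sessions above.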

Finally, check that the services are healthy:

```
(root@nebula) [(none)]> show hosts storage
+-------------+------+----------+-----------+--------------+---------+
| Host        | Port | Status   | Role      | Git Info Sha | Version |
+-------------+------+----------+-----------+--------------+---------+
| "new-node1" | 9779 | "ONLINE" | "STORAGE" | "b062493"    | "3.6.0" |
| "new-node2" | 9779 | "ONLINE" | "STORAGE" | "b062493"    | "3.6.0" |
| "new-node3" | 9779 | "ONLINE" | "STORAGE" | "b062493"    | "3.6.0" |
+-------------+------+----------+-----------+--------------+---------+
Got 3 rows (time spent 817/1421 us)
```
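When scripting the check, you can fail fast if any storage host is not ONLINE. This sketch assumes the table layout shown above, with Status as the third column, and reads the console output on stdin. `all_storage_online` is a hypothetical name.

```shell
# Hypothetical helper: read SHOW HOSTS STORAGE output on stdin and exit non-zero
# unless at least one host row exists and every row's Status is "ONLINE".
all_storage_online() {
  awk -F'|' '
    $2 ~ /"/ {                          # host rows carry a quoted Host value
      rows++
      status = $4                       # column order: Host | Port | Status | ...
      gsub(/[ \t"]/, "", status)
      if (status != "ONLINE") { print "not online:" $2 > "/dev/stderr"; bad = 1 }
    }
    END { exit (bad || rows == 0) }
  '
}

# Usage, assuming nebula-console's -e option:
# ./nebula-console3.0 -address "new-node1" -port 9669 -u root -p nebula \
#   -e 'SHOW HOSTS STORAGE' | all_storage_online && echo "all storage hosts online"
```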

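This approach also works for migrating data with snapshots. Create a snapshot on the source cluster, copy it to the target, and then follow the IP replacement steps above.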

Author: Henry

Proofreading & editing: 清蒸